Introduction

Almost no one can imagine our life without presentations and demos via videoconferences. With this in mind, think about the case when you are going along the street or on the train to another city. Meanwhile, you have to attend a video conference. Suddenly you have a small internet bandwidth. That small that you cannot view the presentation and hear the voices without corruption. The possible solution will be to switch to so-called «text-only» mode.

There is no such mode yet, but let’s outline a concept of the alternative GUI for, let’s say, Google Meet. In this mode, you can read the presentation slides and the voices transcribed in the correct order.

The possible use case is to review all the information in close to real-time delay because text information is not that heavy. In addition, you may review the details you have missed because of the bad internet in the chat manner. And, hopefully, after a certain time, you may get the good internet back. From there on you continue looking at the presentation by switching back to the normal mode.

Another non-obvious advantage is a clear and precise text meeting summary because only some encounter such network issues.

During this article, we will focus on the process of correctly transcribing presentations using OpenAI API models.

The idea behind the scene

Transcription is an easy task using the power of the existing OCR techniques. Yeah, it is mostly the case, but the problems arise when we have rearranged blocks of text on the image. Often it’s almost impossible to order text correctly without looking at the content from the human perspective. It’s where the multimodal models come in.

We will use the advancements in the large multimodal models and test the performance of the OpenAI GPT4-Turbo on the given task.

Pipeline

DataSet

There are 2 main stages:

- prepare the samples for processing;

- process the samples using the OpenAI API.

Images preparation

Firsthand, the slide images may be obtained by downloading the presentation as separate images in the Canva environment. In the next step, we ought to encode our local pictures to send them to OpenAI API.

OpenAI API

After the input data preparation, we use the OpenAI API ChatCompletion request. In addition, we set the «temperature» to «0» and «detail» to «high» (it makes more sense for slides where we have small font text blocks).

Previously GPT4V was used for this purpose, but when the article is prepared, we have a better option: use the GPT4-Turbo for the vision tasks too.

The following prompt was used to get the ordered text from the slides

You are a helpful assistant. Separate the slide title if it exists. Group all the text blocks from the presentation slide into the subsequent text blocks. The order of the blocks should be preserved like a human have read this presentation.Results

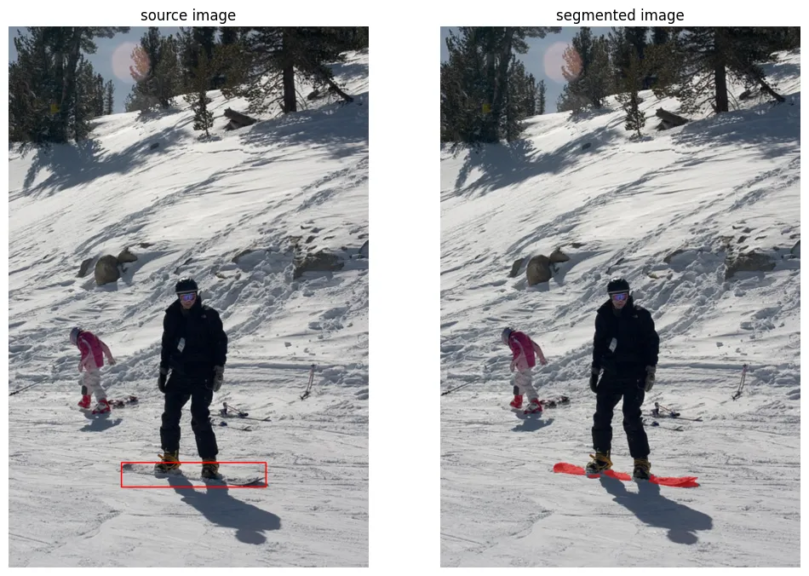

On slide 1 we correctly detected and combined text in the consecutive blocks.

On slide 2 we lacked the title so the model provided its vision of the title (does make sense if we want to follow slide structure). Overall performance is great and the model got this slide.

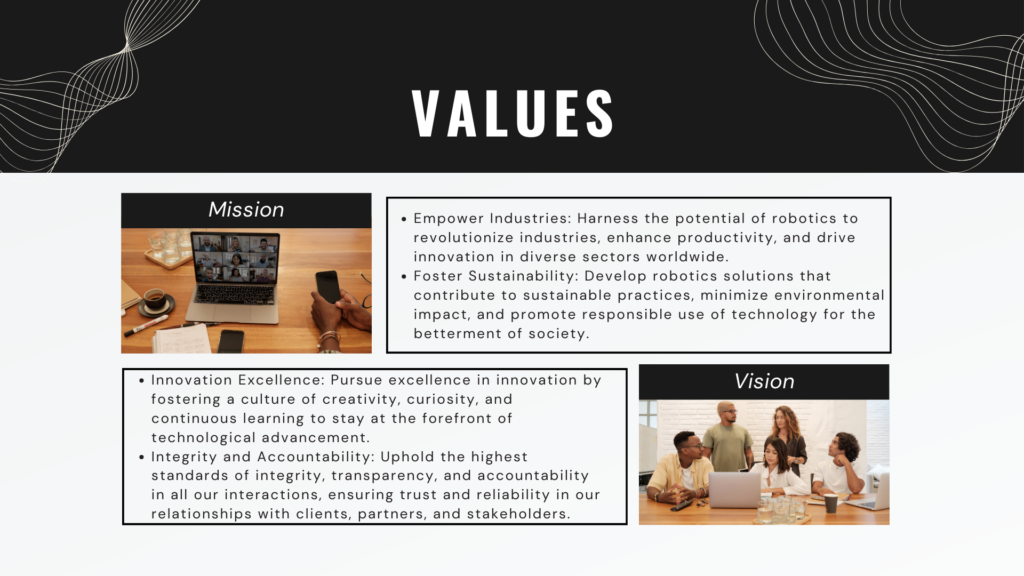

The model correctly detected text blocks and even some text from the pictures, but it got confused with sublines (by changing Values with Vision and swapping Mission and Vision text blocks). Here is the place for additional prompt engineering and extra algorithms on top of this.

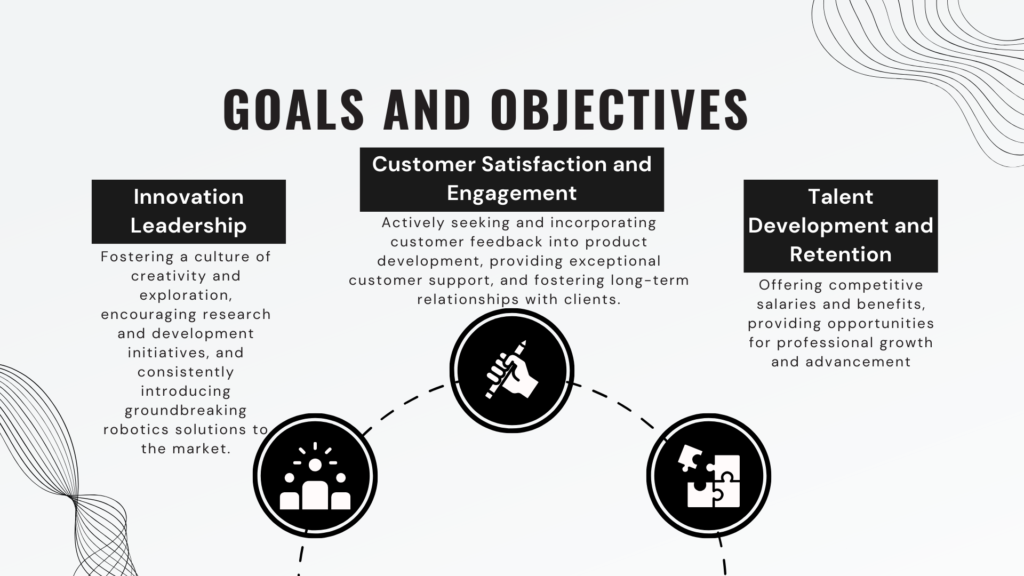

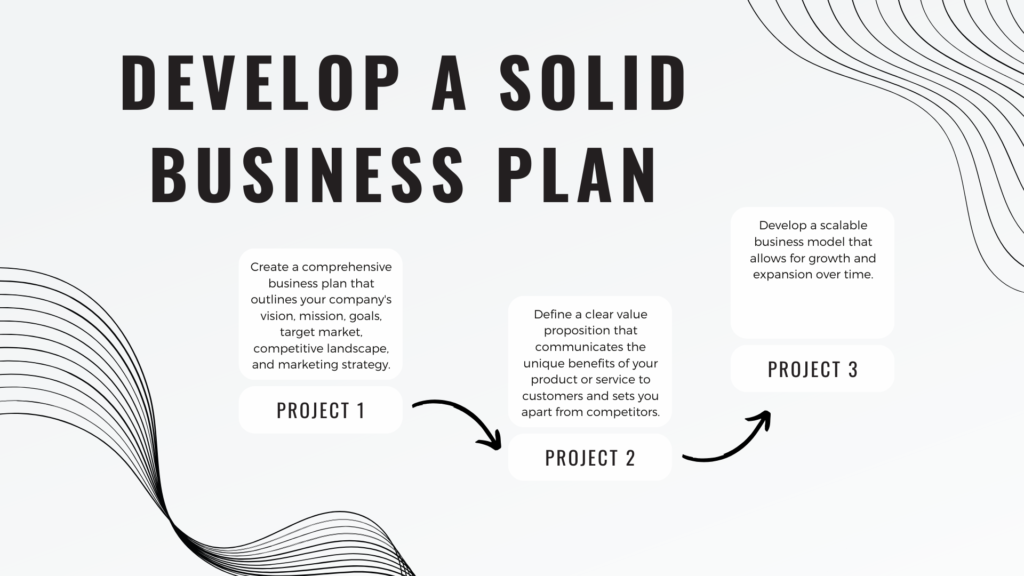

GPT4-Turbo correctly understood the structure and the sequence of the projects on the given slide.

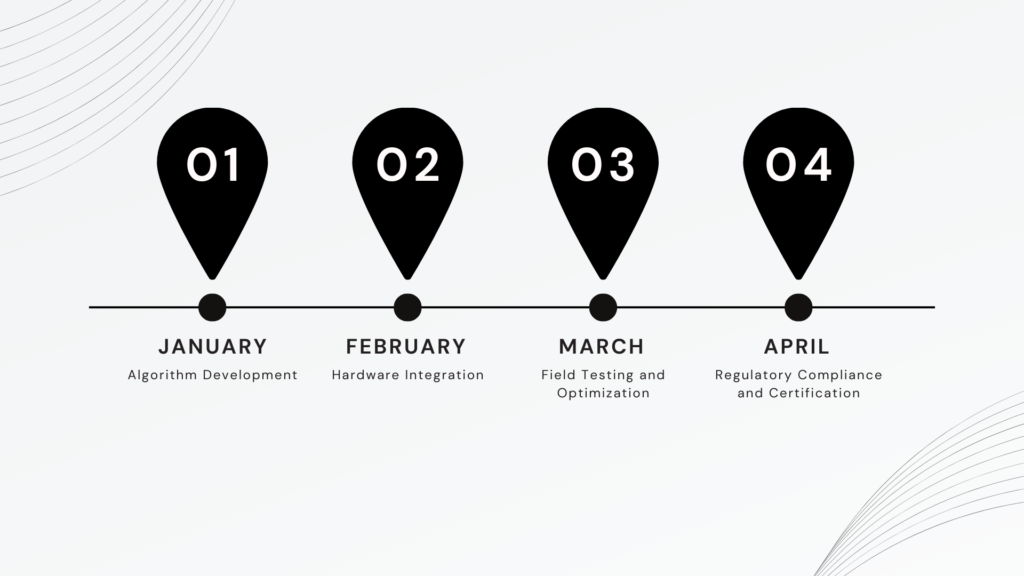

The model handled the transcription of the provided slide without any problems.

Conclusion

The GPT4-Turbo handles the slide transcription process with high quality. This process is not yet perfect, but it’s just a place to start improving. There is plenty of work to reach a production-ready performance and reliability. All the code may be found here. Thanks for reading till the end of the article. Don’t hesitate to share your opinion or ideas on what can be done differently.