Introduction

We needed creative content, and we hit a wall. Not because of a lack of AI tools, but because of a lack of trustworthy comparisons. Every article lists features, but we couldn’t find a single resource that tested the major services with the exact same prompts to show how they actually perform in the real world.

Most reviews test each service with different tasks, making real comparison impossible. Some services might need specially adapted prompts, others work with anything you throw at them. Without side-by-side testing, you can’t know which is which.

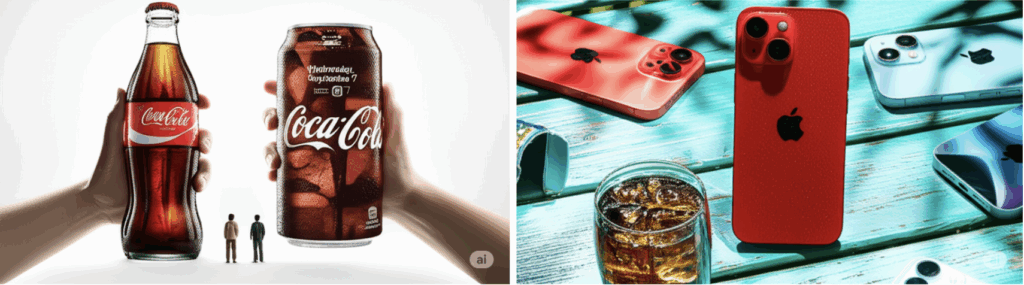

So, we did it ourselves. We took a simple creative challenge — making Apple and Coca-Cola swap visual styles — and ran the exact same prompts through every major AI service we could access. Some we already had subscriptions for (ChatGPT Plus, Gemini Advanced), others we had to pay for (Runway, Midjourney, Pika), and the rest we tested using free tiers.

Some services completely failed the task—maybe they need different prompt structures, maybe they’re just not capable. Others surprised us with quality we didn’t expect from free tiers. You can look at the results and decide for yourself what works for your needs.

Here’s what actually happened.

Why this specific test

We needed something that would reveal real capabilities, not just whether a service can generate “a cat sitting on a chair.” The challenge: take Apple’s minimalist product photography style and apply it to Coca-Cola products, then reverse it.

This tests several things at once:

- Understanding of visual styles

- Text rendering

- Attention to details like reflections and materials

- Consistency across attempts

The testing process

Image generation test

For the image test, we used these reference photos:

Then we used these detailed prompts for all services:

Apple style with Coca-Cola products: “Ultra‑clean Apple‑style studio backdrop, infinite pure white with soft, shadow‑free lighting. Two gigantic drinks replace the phones: a dewy glass Coca‑Cola bottle on the left and an ice‑cold red Coke can on the right. Each drink is styled exactly like an iPhone 14 – matte‑glass texture and dual‑lens–pattern condensation on the bottle, while the can’s glossy front behaves like a screen displaying “9 : 41 Wednesday, September 7” with tiny battery and calendar widgets formed from sparkling cola bubbles. At the base of each drink stands miniature male figures: one wearing a beige oversized sweater, the other in a graphite T‑shirt. Their bodies are tiny – about one‑eighth the height of the drinks—yet each has an oversized, outstretched hand that reaches up to hold the respective bottle or can. The contrast makes their arms appear enormous and elongated while their torsos and heads remain small, evoking the surreal scale play of the original Apple shot.”

Coca-Cola style with iPhone products: “Retro wooden table painted turquoise with weathered texture, sun‑dappled light filtering through leaves. Center stage stands an iPhone 14 (PRODUCT) RED, beads of condensation covering its body like an ice‑cold bottle. The screen faces the viewer and shows the classic lock screen “9:41 Wednesday, September 7”. Around it, several light‑blue iPhone 14 units lie casually, reflecting sunlight the way aluminum Coke cans would. In the foreground a tumbler filled with fizzy cola and large ice cubes; reflections of a glossy phone surface shimmer inside the drink. Keep the warm, nostalgic “feel‑good” vibe of a classic Coca‑Cola ad, 8‑k photorealism, emphasize sparkling highlights and vivid red‑and‑turquoise color palette.”

Video generation test

For video testing, we used these 5-second reference clips:

The video transformation prompts were:

iPhone in Coca-Cola’s style: “Create a video with a slow, cinematic panning shot across a diverse collection of iPhones from different eras, arranged on a clean, bright white surface. The lighting should be bright, diffuse, and celebratory, creating soft reflections on the glass and metal surfaces. The atmosphere is nostalgic, showing the evolution of an iconic product. The video starts with a close-up on a modern iPhone, with light glistening on its surface. The camera then slowly pans left, shifting focus to an older, classic iPhone model in the foreground, showing its iconic design. The shot finally settles on a central composition, with a key iPhone model as the main focus, surrounded by a family of other models from various years. The final shot is a historical tableau of the iPhone’s design evolution.”

Coca-Cola in iPhone’s style: “Create a video set against a pure, pitch-black, infinite background, featuring a dramatic and magical reveal of a single, iconic glass Coca-Cola bottle. The lighting is highly stylized, moody, and premium. The sequence begins in near-total darkness, with only a thin sliver of light tracing the bottle’s iconic curved silhouette. Then, a magical CGI effect begins: dark, effervescent liquid and fizzing bubbles swirl and coalesce out of the darkness, dynamically forming the shape of the bottle. This effect quickly retracts and settles, unveiling the pristine, final Coca-Cola bottle. The final shot holds on the perfectly lit bottle floating motionlessly in the black void, with sophisticated lighting sculpting its famous curves.”

Part 1:

Image generation services

1. DALL-E 3 (OpenAI)

Link: https://openai.com/dall-e-3

Backend Model: DALL-E 3

Subscription Used: Paid $20/month (via ChatGPT Plus)

Input: Text prompts and reference image

Results: DALL-E 3 demonstrates strong prompt adherence, accurately interpreting both complex style swaps with attention to specific details like timestamps, clothing colors, and the unique scale dynamics requested. Excels at text rendering and fine details – significantly better than other models tested. Shows minor element mixing (e.g., adding a Coke glass to the iPhone scene on the bench), but this doesn’t detract from the overall success. Has some issues with the miniature people, but still handles the surreal scale concept reasonably well.

2. Krea AI

Link: https://www.krea.ai

Backend Model: Krea 1

Subscription Used: Free tier with limitations

Input: Text prompt, reference image

Results: Performs significantly worse than DALL-E 3. Similarly mixes elements – adds Coca-Cola products and people to the iPhone bench scene, but much more critically than DALL-E’s subtle addition. For the Apple-style Coca-Cola image somewhat misinterprets the instructions, particularly the scale dynamics and positioning of miniature figures, though still produces a decent attempt.

3. Leonardo AI

Link: https://leonardo.ai

Backend Model: Used Flux (also has Phoenix 1.0, gpt-image-1, lucid realism)

Subscription Used: Free tier (150 tokens daily)

Input: Text prompt and reference image

Results: Leonardo AI Flux shows good performance, particularly excelling at the miniature people concept where other models struggled. Perfectly executes the surreal scale dynamics with tiny figures having oversized hands reaching up to hold the products – exactly as requested. Overall handles both style swaps well, though shows some inaccuracies with smaller details.

4. Imagen 4 (Google Gemini)

Link: https://gemini.google.com

Backend Model: Imagen 4

Subscription Used: Paid $20/month

Input: Text prompt and reference image

Results: Gemini performs reasonably well but again misunderstands the Apple-style Coca-Cola concept. The miniature figures are present, but aren’t executed as requested. Shows text rendering defects on the can and bottle, as well as a notable camera module defect on the iPhone.

5. Runway ML

Link: https://runwayml.com

Backend Model: Gen-4

Subscription Used: Paid $10/month

Input: Text prompt and reference image

Results: Runway produces notably strange renderings with significant quality issues. The Coca-Cola style scene captures the turquoise table but the phones look abnormal with condensation effects. For the Apple-style image, while it attempts the miniature people concept, the figures appear distorted and unnatural.

6. Stable Diffusion

Link: https://stablediffusionweb.com

Backend Model: Stable Diffusion XL

Subscription Used: Free

Input: Text prompt, reference image

Results: Stable Diffusion shows decent attention to details but suffers from multiple anomalies. The iPhones display significant camera module defects and distortions. For the Apple-style Coca-Cola image, completely misinterprets the miniature people concept – instead of tiny figures with oversized hands reaching up, it shows multiple normal-sized figures holding the products. Additionally, the human figures have severe anatomical issues with 6-7 fingers per hand

7. Midjourney

Link: https://midjourney.com

Backend Model: Midjourney v7

Subscription Used: Paid $10/month

Input: Text prompt, reference image

Results: Midjourney shows strong attention to visual details and rendering quality, but fails to properly execute the style swap concept. Mixes iPhones and Coca-Cola products on the turquoise bench rather than transforming one into the other’s style. For the Apple-style image, completely misses the miniature people with oversized hands concept – just shows regular hands holding products. Has notable text rendering issues.

8. Luma AI

Link: https://dream-machine.lumalabs.ai/

Backend Model: Photon

Subscription Used: Paid $10/month

Input: Text prompt and reference image

Results: Luma AI delivers mediocre performance overall. Handles the iPhone Coca-Cola style reasonably well – captures the turquoise table and condensation effects, though oddly places the “9:41 Wednesday September 7” text on the phone’s back rather than the screen. The Apple-style Coca-Cola attempt is particularly weak – completely misses the oversized hands concept.

9. Adobe Firefly

Link: https://firefly.adobe.com

Backend Model: Adobe Firefly

Subscription Used: Free tier

Input: Text prompt and reference image

Results: Adobe Firefly performs the worst among all models tested. Significant text rendering problems. Fails to execute meaningful style transformation.

Part 2:

Video generation services

1. Sora (OpenAI)

Link: https://openai.com/sora

Backend Model: Sora

Subscription Used: Paid $20/month (via ChatGPT Plus)

Input Requirements: Text prompt, full reference video

Results: Only service that could accept and transform complete videos. The style transfer was remarkably accurate – it understood the essence of each brand’s visual language. Quality remained broadcast-ready throughout.

2. Veo3 (Google)

Link: https://gemini.google/overview/video-generation/

Backend Model: Veo3

Subscription Used: Paid $20/month (Gemini Advanced)

Input Requirements: Text prompt or first frame only – no full video input

Results: Excellent visual quality with the unique addition of synchronized sound effects.

3. Runway (Gen-3 and Gen-4)

Link: https://runwayml.com

Backend Model: Gen-4/Turbo

Subscription Used: Paid $10/month (Standard plan = 625 credits), Gen-4: 12 credits/sec

Input Requirements: Gen-4: First frame and text prompt

Results: Good visual quality. But at 12 credits per second, experimentation gets expensive quickly.

4. Pika

Link: https://pika.art

Backend Model: Pika 2.2

Subscription Used: Paid $10/month

Input Requirements: Text prompt, first image, keyframe support

Results: Decent motion understanding but struggled with the brand transformation concept. The prompts would need significant refinement to yield better results.

5. Midjourney Video

Link: https://midjourney.com

Backend Model: Experimental video features

Subscription Used: Same $10/month plan

Input Requirements: Only Midjourney-generated images

6. Luma Dream Machine

Link: https://dream-machine.lumalabs.ai/

Backend Model: Ray2

Subscription Used: Paid $10/month

Input Requirements: Text prompt, start/end images, or video upload

Part 3:

Advanced Test

Multi-Scene Narrative Consistency

After the brand swap test, we decided to test something more ambitious: could these tools maintain character and environment consistency across a complete 5-scene narrative?

The scenario: “A chrome-skinned humanoid robot with glowing blue LED eyes sits poised behind a minimalist glass desk in a bright, futuristic office that overlooks a bustling skyline. A nervous young professional in a navy suit steps through the glass door, approaches the desk, and takes a seat opposite the robot. As the interview begins, the robot leans forward, its eyes pulsing while a holographic display hovers beside it, updating with colorful graphs and metrics that rise steadily as the candidate speaks with growing confidence. When the questions conclude, the hologram flares to display five golden stars and the words ‘PERFECT MATCH,’ bathing the room in celebratory light, and the robot stands to offer a firm, metallic handshake that the smiling candidate eagerly returns.”

There are specialized services for this kind of work (Katalist.ai, Storyboarder.ai, boords.com), but they lack APIs and we wanted to see how mainstream tools would handle it.

The Approach

Our first attempt used the last frame from each video as the starting point for the next scene. This failed completely – the AI would distort elements and add jarring new details that broke continuity.

The solution: generate a fresh starting image for each scene based on detailed prompts, then use that for video generation. This maintained much better consistency.

Testing Process

We broke the narrative into 5 scenes:

- Robot waiting in office

- Candidate enters

- Hologram shows rising metrics

- “Perfect Match” celebration

- Handshake

We tested this with Sora and Runway Gen-4, as they showed the most promise in earlier tests.

Results

Sora:

Runway Gen-4:

Looking at results, we found Runway Gen-4 easier to work with for achieving something usable. Sora tends to hallucinate more frequently, adding elements we didn’t ask for or interpreting prompts in unexpected ways. Though we have to admit, when Sora gets it right, the detail and cinematic quality are noticeably better than Runway.

Multi-scene conclusions

We’ll be honest – we didn’t spend hours perfecting prompts for each scene. This was more of a quick test to see baseline capabilities. With more detailed prompts and careful iteration, results would certainly improve.

What’s clear is that maintaining consistency across multiple scenes remains challenging for these general-purpose tools. Let viewers judge for themselves which results they prefer. Both services show promise, but also reveal how far we still have to go for truly consistent AI-generated narratives.

Final Thoughts

These tools are simultaneously impressive and frustrating. They’ll create impossible things then fail at basic tasks. The same service might be perfect for one use case and useless for another.

The test indicates DALL-E 3 is highly effective with complex, narrative-style prompts, whereas Midjourney seems to require a more structured, style-first approach to avoid ‘mixing’ elements.

Overall, DALL-E 3 performed best for complex static image prompt adherence, while Sora and Veo3 showed the highest quality in video.

Success often comes down to finding the right model, understanding hidden limitations, or accepting unique workflows.

Most importantly: budget more time and money than expected. Between learning curves, failed generations, and iteration, nothing is as quick or cheap as advertised.