Why Bots Still Need Browsers

In B2B sales, data collection is crucial. Lead generation means digging up profiles, checking whether they’re a good fit, and writing personalized messages every day, which can be exhausting. No wonder the idea of automating all of that is so tempting: it promises to take the boring, repetitive work off your plate so you can focus on what matters.

The answer, it seemed, had to be AI: an automation assistant that finds, qualifies, and connects with leads on your behalf.

Our vision was simple: feed the system potential contacts, and it would autonomously find their true profiles, qualify them, and initiate personalized connections. It seemed straightforward. Yet, the journey revealed technical complexities and limitations of even the most advanced AI agents.

Why AI Agents Weren’t Enough

Our first instinct was to throw AI at the problem. Could we use an LLM agent, built with a framework like CrewAI, to visit profiles, extract details, and qualify leads?

Technically, yes, but here’s the catch:

The core of the problem lies in how modern web applications are built. We encountered a fundamental disconnect between what an AI agent “sees” and what a human user experiences in the browser. This discrepancy boils down to client-side rendering and the related concept of hydration.

Imagine a website as a two-layered cake. The initial HTML delivered to the browser is the bare structure – the empty layers. This static HTML often contains semantic tags like <main>, <h1>, and <section>, providing a basic outline of the content. However, the actual frosting and filling – the dynamic content we interact with – are rendered client-side using JavaScript frameworks like React or Vue after the initial HTML is loaded.

This is where LLM agents that read raw HTML fail. They treat the HTML as plain text, oblivious to the JavaScript execution that brings the page to life. Consequently, when faced with a modern web application, especially after logging in, such an agent often sees only the empty containers: a <div id="root"> and a multitude of meaningless, dynamically generated class names like a5gh2r2v. The valuable inner text, the actual content of the profile, remains invisible.

This fundamental limitation meant that our AI agents were effectively blind within the very environments they needed to navigate. They could parse static HTML with ease, but they were lost when confronted with the dynamic content of logged-in interfaces – the very platforms where valuable B2B contacts reside.
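To make the gap concrete, here is a minimal sketch that uses only the Python standard library. The `RAW_HTML` string is a made-up example of what a server might send for a client-rendered page, and `visible_text` is a hypothetical helper that approximates what a text-only agent can read from it.

```python
from html.parser import HTMLParser

# Hypothetical raw response for a client-rendered page: the server sends only
# an empty mount point and a script tag; the profile text is added later by JS.
RAW_HTML = """
<!doctype html>
<html>
  <head><title>Profile</title></head>
  <body>
    <div id="root"></div>
    <script src="/static/app.js"></script>
  </body>
</html>
"""


class VisibleTextParser(HTMLParser):
    """Collects body text, skipping <script>, <style>, and <title> content."""

    SKIPPED = {"script", "style", "title"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-blank text that sits outside the skipped tags.
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def visible_text(html: str) -> str:
    """Return the text a reader of the raw markup could extract."""
    parser = VisibleTextParser()
    parser.feed(html)
    return " ".join(parser.chunks)
```

For the raw response above, `visible_text(RAW_HTML)` returns an empty string: there is nothing to read until a JavaScript engine runs the application code and fills the root container.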

The Browser as the Eye

Realizing that AI couldn’t perceive the web as a user does, we moved to a more robust solution: browser automation. By leveraging tools like Playwright, we could instantiate a browser environment complete with a JavaScript engine. This allowed our automation to:

- Execute JavaScript: Rendering the dynamic content and making the “invisible” visible.

- Wait for elements to appear: Handling asynchronous loading of data.

- Mimic human behavior: Enabling interactions like clicks, scrolls, and deliberate delays.

- Seamlessly interact with React/Vue/SPAs: Navigating and extracting data from modern web applications.
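The capabilities listed above can be sketched with Playwright’s synchronous Python API. This is an illustration rather than our production code: `read_rendered_text` and `run_with_playwright` are hypothetical names, and the URL and selector are placeholders.

```python
def read_rendered_text(page, url: str, selector: str, timeout_ms: int = 15_000) -> str:
    """Load `url` in a browser page and return the rendered text of `selector`.

    `page` is expected to behave like a Playwright sync `Page`.
    """
    page.goto(url, wait_until="domcontentloaded")
    # Block until client-side rendering has actually produced the element.
    page.wait_for_selector(selector, state="visible", timeout=timeout_ms)
    return page.inner_text(selector)


def run_with_playwright() -> str:
    """Example wiring; requires the `playwright` package and a browser install."""
    # Imported lazily so the helper above can be exercised without Playwright.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch()
        page = browser.new_page()
        # Placeholder URL and selector, not taken from a real target platform.
        text = read_rendered_text(page, "https://example.com", "h1")
        browser.close()
        return text
```

The explicit wait is the important part: the text is read only after the browser’s JavaScript engine has rendered the element, which is exactly the step a plain HTML fetch skips.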

The key insight was recognizing the two distinct layers of modern websites: the lightweight, SEO-optimized HTML skeleton (visible to AI) and the JavaScript-rendered content (visible only to a real browser). For dynamic platforms, a true browser was not just an alternative; it was a necessity.

Fighting Bot Detection

However, simply using a browser wasn’t the end of our challenges. Modern platforms are heavily fortified against automated behavior. Ignoring these bot detection mechanisms inevitably leads to temporary account locks, verification requests, and, potentially, bans. To build a reliable LeadGen Agent, we had to become experts in evading these digital gatekeepers.

Our approach involved a series of countermeasures designed to make our automated browser sessions appear indistinguishable from genuine human activity.

Imitating a Real Browser

The default “headless” mode of Chromium, often used in automation, carries telltale signs of automation. We moved away from it and opted to launch a full Chrome browser on the user’s system. This seemingly simple change brought significant advantages:

- Real GPU Rendering: Utilizing the actual graphics processing unit, unlike the software rendering often detected in headless environments.

- Actual Plugin Stack: Presenting a realistic set of browser plugins.

- System Language: Matching the user’s operating system language.

- Headed Mode (with GUI): Running the browser with a visible graphical interface, which sets it apart from the many bots that run silently in the background.

- Manual User-Agent String: Precisely matching the user-agent string of Chrome on macOS, as inconsistencies between the user agent, cookies, and WebGL data are red flags.
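A rough sketch of such a launch with Playwright for Python might look like the following. The helper names are hypothetical, the user-agent string is supplied by the caller, and deriving the locale from a POSIX-style `LANG` value is an assumption made for illustration. GPU rendering and the plugin stack are not set in code here; they come from running the installed Chrome itself.

```python
def to_browser_locale(lang_env: str, default: str = "en-US") -> str:
    """Convert a POSIX-style value such as 'en_US.UTF-8' to 'en-US'."""
    base = lang_env.split(".")[0].split("@")[0]
    if base in ("", "C", "POSIX"):
        return default
    return base.replace("_", "-")


def open_real_chrome(playwright, user_agent: str, lang_env: str):
    """Start the installed Chrome in headed mode with a matching context.

    `playwright` is the object yielded by `sync_playwright()`.
    """
    browser = playwright.chromium.launch(
        channel="chrome",  # installed Google Chrome, not the bundled Chromium
        headless=False,    # visible window
    )
    context = browser.new_context(
        user_agent=user_agent,                # must agree with the real browser
        locale=to_browser_locale(lang_env),   # follow the system language
    )
    return browser, context
```

In use, `lang_env` could come from `os.environ.get("LANG", "")`, and `user_agent` should be the exact string the installed Chrome version reports, so that it does not contradict the other fingerprint signals.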

Fingerprint Synchronization

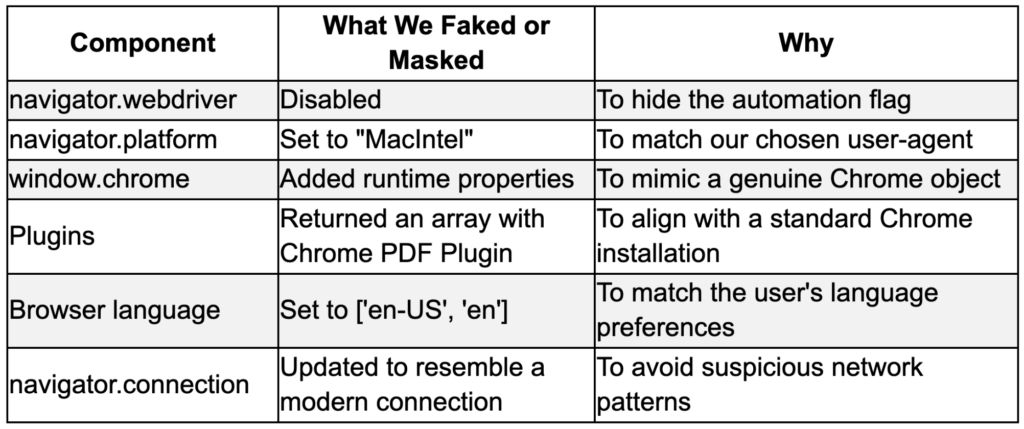

Platforms don’t rely solely on cookies or login data. Browser fingerprinting analyzes a multitude of browser parameters to assess the “humanity” of a session. We meticulously faked or masked several key components:

Simulating the Referer

The HTTP Referer header provides information about the previous page the user visited. Real users rarely land directly on a page; they typically arrive from search engines, profile clicks, or social media feeds. A missing or inconsistent Referer can be a strong indicator of automated activity. We manually set realistic Referer headers for every request, mimicking natural browsing patterns.
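As a minimal sketch, Playwright’s `page.goto` accepts a `referer` argument for the navigation request. The `referer_for` and `open_with_referer` helpers below are hypothetical, and the two entry points with their URLs are illustrative assumptions, not the values we used.

```python
from urllib.parse import urlsplit


def referer_for(target_url: str, came_from: str) -> str:
    """Pick a plausible Referer for a navigation to `target_url`.

    `came_from` is an illustrative label: "search" or "internal".
    """
    if came_from == "search":
        return "https://www.google.com/"
    if came_from == "internal":
        # Same-site click: use the origin of the page being opened.
        parts = urlsplit(target_url)
        return f"{parts.scheme}://{parts.netloc}/"
    raise ValueError(f"unknown entry point: {came_from!r}")


def open_with_referer(page, target_url: str, came_from: str) -> str:
    """Navigate with an explicit Referer and return the value that was sent."""
    referer = referer_for(target_url, came_from)
    page.goto(target_url, referer=referer)
    return referer
```

Note that this covers only the top-level navigation; requests the page issues afterwards carry whatever Referer the browser assigns on its own.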

Human-Like Behavior

To further blend in, we introduced subtle variations in our automation:

- Gradual Scrolling: Scrolling pages in chunks, mimicking human reading speed.

- Character-by-Character Typing: Simulating the act of typing messages with realistic delays between keystrokes.

- Delays Between Actions: Introducing delays between steps, drawn from a normal distribution.
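The three behaviors above could be sketched roughly as follows. All means, standard deviations, and chunk sizes are illustrative guesses rather than tuned values from our system, and `page` is assumed to behave like a Playwright sync `Page`.

```python
import random
import time


def human_delay(rng: random.Random, mean: float = 1.2, stddev: float = 0.4,
                minimum: float = 0.2) -> float:
    """Draw a pause in seconds from a normal distribution, clamped from below."""
    return max(minimum, rng.gauss(mean, stddev))


def scroll_chunks(total_px: int, rng: random.Random,
                  low: int = 250, high: int = 600) -> list:
    """Split a scroll distance into uneven chunks that add up to `total_px`."""
    chunks = []
    remaining = total_px
    while remaining > 0:
        step = min(remaining, rng.randint(low, high))
        chunks.append(step)
        remaining -= step
    return chunks


def scroll_like_a_reader(page, total_px: int, rng: random.Random,
                         sleep=time.sleep) -> None:
    """Scroll down in chunks, pausing between them as a reader would."""
    for step in scroll_chunks(total_px, rng):
        page.mouse.wheel(0, step)
        sleep(human_delay(rng, mean=0.8, stddev=0.3))


def type_like_a_human(page, selector: str, text: str, rng: random.Random,
                      sleep=time.sleep) -> None:
    """Focus a field and type one character at a time with varied pauses."""
    page.click(selector)
    for ch in text:
        page.keyboard.type(ch)
        sleep(human_delay(rng, mean=0.12, stddev=0.05, minimum=0.03))
```

Passing the random generator and the `sleep` function as arguments keeps the timing reproducible in tests; in a live session, `random.Random()` and the default `time.sleep` would be used.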

Conclusion

The development of LeadGen Agent proved to be a profound learning experience. What initially seemed like a straightforward automation task – replicating repeatable browser actions – demanded a deep understanding of modern web technologies, browser environments, and the tactics of bot detection systems.

Our journey revealed crucial lessons:

- LLM agents, in their current state, have inherent limitations when dealing with dynamic web content and cannot fully replace the functionality of a complete browser.

- Bot detection is a sophisticated, multi-layered defense mechanism that analyzes everything from the WebGL renderer to subtle nuances in user interaction.

- Achieving stable and reliable automation on modern web platforms requires meticulous simulation of actual human behavior across every detail.

Ultimately, building effective lead generation automation for today’s web requires more than just intelligent agents. It demands the power of a real browser coupled with a nuanced understanding of how to mimic the subtle actions of a human user. The illusion of pure AI automation in this domain is just that – an illusion. The reality is a complex interplay between artificial intelligence and the art of digital mimicry.

You can find this article published on Medium. It was written by our team members, Anastasiia Mostova and Andy Bosyi.